Summary:

Add a GitHub Action to perform some basic sanity checks for PRs, including the

following.

1) Buck TARGETS file.

On the one hand, the TARGETS file is used for internal Buck, and we do not

manually update it. On the other hand, we need to run the buckifier scripts to

update TARGETS whenever new files are added, etc. With this GitHub Action, we

make sure that no PR forgets this step. The GH Action uses

a Makefile target called check-buck-targets. Users can manually run `make

check-buck-targets` on their local machine.

2) Code format

We use clang-format-diff.py to format our code. The GH Action in this PR makes

sure this step is not skipped. This GH Action does not use the Makefile target

called "make format" because "make format" currently runs

build_tools/format-diff.sh which requires clang-format-diff.py to be

executable. The clang-format-diff.py on our dev servers is not python3

compatible either.

On the host running the GH Action, it is difficult to download a file and make it

executable. Modifying build_tools/format-diff.sh is less necessary, especially

since the command we actually use for format checking is quite simple, as

illustrated in the workflow definition.

Test Plan:

Watch for the GitHub Action result.

Summary:

In https://github.com/facebook/rocksdb/pull/6455, we modified the interface of `RandomAccessFileReader::Read` to be able to get rid of memcpy in direct IO mode.

This PR applies the new interface to `BlockFetcher` when reading blocks from SST files in direct IO mode.

Without this PR, in direct IO mode, when fetching and uncompressing compressed blocks, `BlockFetcher` first copies the raw compressed block into `BlockFetcher::compressed_buf_` or `BlockFetcher::stack_buf_` inside `RandomAccessFileReader::Read`, depending on the block size. Then, during uncompressing, it copies the uncompressed block into `BlockFetcher::heap_buf_`.

In this PR, we get rid of the first memcpy and directly uncompress the block from `direct_io_buf_` to `heap_buf_`.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/6689

Test Plan: A new unit test `block_fetcher_test` is added.

Reviewed By: anand1976

Differential Revision: D21006729

Pulled By: cheng-chang

fbshipit-source-id: 2370b92c24075692423b81277415feb2aed5d980

Summary:

Updates the version of bzip2 used for RocksJava static builds.

Please, can we also get this cherry-picked to:

1. 6.7.fb

2. 6.8.fb

3. 6.9.fb

Pull Request resolved: https://github.com/facebook/rocksdb/pull/6714

Reviewed By: cheng-chang

Differential Revision: D21067233

Pulled By: pdillinger

fbshipit-source-id: 8164b7eb99c5ca7b2021ab8c371ba9ded4cb4f7e

Summary:

This PR implements a fault injection mechanism for injecting errors in reads in db_stress. The FaultInjectionTestFS is used for this purpose. A thread local structure is used to track the errors, so that each db_stress thread can independently enable/disable error injection and verify observed errors against expected errors. This is initially enabled only for Get and MultiGet, but can be extended to iterators as well once it's proven stable.
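
The per-thread tracking described above could be sketched roughly as follows, using only the standard library. All names here (`ErrorContext`, `EnableErrorInjection`, `MaybeInjectReadError`, and so on) are hypothetical and are not the FaultInjectionTestFS API; the point is only that `thread_local` state lets each thread turn injection on and off and count its own injected errors independently.

```cpp
#include <cassert>
#include <cstdint>
#include <random>

// Hypothetical per-thread state; not the actual FaultInjectionTestFS API.
struct ErrorContext {
  bool enabled = false;
  int one_in = 0;         // inject roughly one error per `one_in` reads
  uint64_t injected = 0;  // errors injected on this thread so far
  std::mt19937 rng{12345};
};

// Every thread that calls this gets its own independent ErrorContext.
inline ErrorContext& ThreadErrorContext() {
  thread_local ErrorContext ctx;
  return ctx;
}

inline void EnableErrorInjection(int one_in) {
  assert(one_in > 0);
  ErrorContext& ctx = ThreadErrorContext();
  ctx.enabled = true;
  ctx.one_in = one_in;
  ctx.injected = 0;
}

inline void DisableErrorInjection() { ThreadErrorContext().enabled = false; }

// Returns true if the current read on this thread should fail.
inline bool MaybeInjectReadError() {
  ErrorContext& ctx = ThreadErrorContext();
  if (!ctx.enabled) return false;
  std::uniform_int_distribution<int> dist(0, ctx.one_in - 1);
  if (dist(ctx.rng) != 0) return false;
  ++ctx.injected;
  return true;
}

// Lets the calling thread compare observed errors against injected ones.
inline uint64_t InjectedErrorCount() { return ThreadErrorContext().injected; }
```

A test thread would call `EnableErrorInjection` before a read, `DisableErrorInjection` after it, and then check that any error it observed is matched by a non-zero `InjectedErrorCount()`.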

Pull Request resolved: https://github.com/facebook/rocksdb/pull/6538

Test Plan:

crash_test

make check

Reviewed By: riversand963

Differential Revision: D20714347

Pulled By: anand1976

fbshipit-source-id: d7598321d4a2d72bda0ced57411a337a91d87dc7

Summary:

Towards making compaction logic compatible with user timestamp.

When computing boundaries and overlapping ranges for inputs of compaction, we need to compare SSTs by user key without timestamp.
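
As a rough illustration of why the timestamp has to be ignored, here is a minimal sketch that assumes the timestamp occupies the last `ts_sz` bytes of each user key and that keys compare bytewise. The helper names (`StripTimestamp`, `CompareWithoutTimestamp`, `RangesOverlap`) are hypothetical and are not the comparator interface used in the PR.

```cpp
#include <cassert>
#include <cstddef>
#include <string>

// Assumption: the timestamp is a fixed-size suffix of the user key.
inline std::string StripTimestamp(const std::string& key, size_t ts_sz) {
  assert(key.size() >= ts_sz);
  return key.substr(0, key.size() - ts_sz);
}

// Bytewise comparison of two user keys, ignoring their timestamps.
inline int CompareWithoutTimestamp(const std::string& a, const std::string& b,
                                   size_t ts_sz) {
  return StripTimestamp(a, ts_sz).compare(StripTimestamp(b, ts_sz));
}

// Two closed key ranges overlap when neither ends before the other begins.
inline bool RangesOverlap(const std::string& smallest_a,
                          const std::string& largest_a,
                          const std::string& smallest_b,
                          const std::string& largest_b, size_t ts_sz) {
  return CompareWithoutTimestamp(largest_a, smallest_b, ts_sz) >= 0 &&
         CompareWithoutTimestamp(largest_b, smallest_a, ts_sz) >= 0;
}
```

With a one-byte timestamp, a file ending at `"c1"` and a file starting at `"c9"` both contain user key `c`, so they overlap here even though a comparison that included the timestamp byte would order them as disjoint.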

Test plan (devserver):

```

make check

```

Several individual tests:

```

./version_set_test --gtest_filter=VersionStorageInfoTimestampTest.GetOverlappingInputs

./db_with_timestamp_compaction_test

./db_with_timestamp_basic_test

```

Pull Request resolved: https://github.com/facebook/rocksdb/pull/6645

Reviewed By: ltamasi

Differential Revision: D20960012

Pulled By: riversand963

fbshipit-source-id: ad377fa9eb481bf7a8a3e1824aaade48cdc653a4

Summary:

New memory technologies are being developed by various hardware vendors (Intel DCPMM is one such technology currently available). These new memory types require different libraries for allocation and management (such as PMDK and memkind). The high capacities available make it possible to provision large caches (up to several TBs in size), beyond what is achievable with DRAM.

The new allocator provided in this PR uses the memkind library to allocate memory on different media.

**Performance**

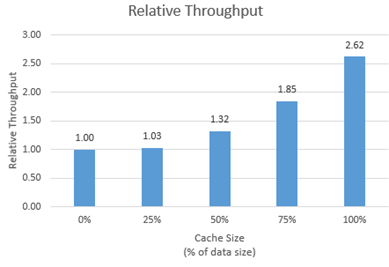

We tested the new allocator using db_bench.

- For each test, we vary the size of the block cache (relative to the size of the uncompressed data in the database).

- The database is filled sequentially. Throughput is then measured with a readrandom benchmark.

- We use a uniform distribution as a worst-case scenario.

The plot shows throughput (ops/s) relative to a configuration with no block cache and default allocator.

For all tests, p99 latency is below 500 us.

**Changes**

- Add MemkindKmemAllocator

- Add --use_cache_memkind_kmem_allocator db_bench option (to create an LRU block cache with the new allocator)

- Add detection of memkind library with KMEM DAX support

- Add test for MemkindKmemAllocator

**Minimum Requirements**

- kernel 5.3.12

- ndctl v67 - https://github.com/pmem/ndctl

- memkind v1.10.0 - https://github.com/memkind/memkind

**Memory Configuration**

The allocator uses the MEMKIND_DAX_KMEM memory kind. Follow the instructions on [memkind’s GitHub page](https://github.com/memkind/memkind) to set up NVDIMM memory accordingly.

Note on memory allocation with NVDIMM memory exposed as system memory.

- The MemkindKmemAllocator will only allocate from NVDIMM memory (using memkind_malloc with MEMKIND_DAX_KMEM kind).

- The default allocator is not restricted to RAM by default. Based on NUMA node latency, the kernel should allocate from local RAM preferentially, but it’s a kernel decision. numactl --preferred/--membind can be used to allocate preferentially/exclusively from the local RAM node.

**Usage**

When creating an LRU cache, pass a MemkindKmemAllocator object as argument.

For example (replace capacity with the desired value in bytes):

```

#include "rocksdb/cache.h"

#include "memory/memkind_kmem_allocator.h"

NewLRUCache(

capacity /*size_t*/,

6 /*cache_numshardbits*/,

false /*strict_capacity_limit*/,

0.0 /*cache_high_pri_pool_ratio*/,

std::make_shared<MemkindKmemAllocator>());

```

Refer to [RocksDB’s block cache documentation](https://github.com/facebook/rocksdb/wiki/Block-Cache) to assign the LRU cache as block cache for a database.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/6214

Reviewed By: cheng-chang

Differential Revision: D19292435

fbshipit-source-id: 7202f47b769e7722b539c86c2ffd669f64d7b4e1

Summary:

Although there are tests related to locking in transaction_test, this new test directly tests against TransactionLockMgr.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/6599

Test Plan: make transaction_lock_mgr_test && ./transaction_lock_mgr_test

Reviewed By: lth

Differential Revision: D20673749

Pulled By: cheng-chang

fbshipit-source-id: 1fa4a13218e68d785f5a99924556751a8c5c0f31

Summary:

Add simple timer support to schedule work at a fixed time.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/6543

Test Plan: TODO: clean up the unit tests, and make them better.

Reviewed By: siying

Differential Revision: D20465390

Pulled By: sagar0

fbshipit-source-id: cba143f70b6339863e1d0f8b8bf92e51c2b3d678

Summary:

When Travis times out, it's hard to determine whether

the last executing thing took an excessively long time or the

sum of all the work just exceeded the time limit. This

change inserts some timestamps in the output that should

make this easier to determine.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/6643

Test Plan: CI (Travis mostly)

Reviewed By: anand1976

Differential Revision: D20843901

Pulled By: pdillinger

fbshipit-source-id: e7aae5434b0c609931feddf238ce4355964488b7

Summary:

This PR adds support for pipelined & parallel compression optimization for `BlockBasedTableBuilder`. This optimization makes block building, block compression and block appending a pipeline, and uses multiple threads to accelerate block compression. Users can set `CompressionOptions::parallel_threads` greater than 1 to enable compression parallelism.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/6262

Reviewed By: ajkr

Differential Revision: D20651306

fbshipit-source-id: 62125590a9c15b6d9071def9dc72589c1696a4cb

Summary:

By supporting direct IO in RandomAccessFileReader::MultiRead, the benefits of parallel IO (IO uring) and direct IO can be combined.

In direct IO mode, read requests are aligned and merged together before being issued to RandomAccessFile::MultiRead, so blocks in the original requests might share the same underlying buffer. The shared buffers are returned in `aligned_bufs`, which is a new parameter of the `MultiRead` API.

For example, suppose the alignment requirement for direct IO is 4KB, one request is (offset: 1KB, len: 1KB), and another request is (offset: 3KB, len: 1KB). Since both belong to the page (offset: 0, len: 4KB), `MultiRead` only reads that page with direct IO into a buffer on the heap, and returns 2 Slices referencing regions in that same buffer. See `random_access_file_reader_test.cc` for more examples.
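
The align-and-merge step in the example above can be sketched as follows. This is a simplified illustration, not the code in `RandomAccessFileReader::MultiRead`: the `ReadRange` struct and the `Align` / `AlignAndMerge` helpers are hypothetical names, the input is assumed to be sorted by offset, and merging ranges that merely touch (rather than strictly overlap) is a choice made for this sketch.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical request type: a byte range in a file.
struct ReadRange {
  uint64_t offset;
  size_t len;
};

// Rounds a request outwards to alignment boundaries.
// `alignment` must be a power of two.
inline ReadRange Align(const ReadRange& r, size_t alignment) {
  assert(alignment > 0 && (alignment & (alignment - 1)) == 0);
  const uint64_t mask = ~static_cast<uint64_t>(alignment - 1);
  const uint64_t start = r.offset & mask;
  const uint64_t end = (r.offset + r.len + alignment - 1) & mask;
  return {start, static_cast<size_t>(end - start)};
}

// Aligns each request, then merges aligned requests that overlap or touch.
// Assumes `reqs` is sorted by offset.
inline std::vector<ReadRange> AlignAndMerge(const std::vector<ReadRange>& reqs,
                                            size_t alignment) {
  std::vector<ReadRange> merged;
  for (const ReadRange& r : reqs) {
    const ReadRange a = Align(r, alignment);
    if (!merged.empty() &&
        a.offset <= merged.back().offset + merged.back().len) {
      const uint64_t end = std::max<uint64_t>(
          merged.back().offset + merged.back().len, a.offset + a.len);
      merged.back().len = static_cast<size_t>(end - merged.back().offset);
    } else {
      merged.push_back(a);
    }
  }
  return merged;
}
```

For the two requests from the example, (1KB, 1KB) and (3KB, 1KB) with 4KB alignment, both align to (0, 4KB) and merge into a single read; each original request then maps to a sub-range of that one buffer.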

Pull Request resolved: https://github.com/facebook/rocksdb/pull/6446

Test Plan: Added a new test `random_access_file_reader_test.cc`.

Reviewed By: anand1976

Differential Revision: D20097518

Pulled By: cheng-chang

fbshipit-source-id: ca48a8faf9c3af146465c102ef6b266a363e78d1

Summary:

In some tests, db_basic_test may time out due to its long running time. Separate the timestamp-related tests from db_basic_test to avoid this issue.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/6516

Test Plan: pass make asan_check

Differential Revision: D20423922

Pulled By: zhichao-cao

fbshipit-source-id: d6306f89a8de55b07bf57233e4554c09ef1fe23a

Summary:

In the `.travis.yml` file the `jdk: openjdk7` element is ignored when `language: cpp`. So whatever version of the JDK was installed in the Travis container was used, typically JDK 11.

To ensure our RocksJava builds are working, we now instead install and use OpenJDK 8. Ideally we would use OpenJDK 7, as RocksJava supports Java 7, but many of the newer Travis containers don't support Java 7, so Java 8 is the next best thing.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/6512

Differential Revision: D20388296

Pulled By: pdillinger

fbshipit-source-id: 8bbe6b59b70cfab7fe81ff63867d907fefdd2df1

Summary:

On Linux, when reopening a DB with many SST files, profiling shows 100% system CPU time spent in `GetLogicalBufferSize` for a couple of seconds. This slows down MyRocks' recovery time when a site is down.

This PR introduces two new APIs:

1. `Env::RegisterDbPaths` and `Env::UnregisterDbPaths` let `DB` tell the env when it starts or stops using its database directories. The `PosixFileSystem` takes this opportunity to set up a cache from database directories to the corresponding logical block sizes.

2. `LogicalBlockSizeCache` is defined only for OS_LINUX to cache the logical block sizes.
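
The idea behind the two APIs can be sketched as a reference-counted map from directory to logical block size. This is a simplified illustration under stated assumptions, not the `LogicalBlockSizeCache` from the PR: the class name `BlockSizeCache`, its method names, and the injected `query` callback (standing in for the expensive per-file system query) are all hypothetical.

```cpp
#include <cstddef>
#include <functional>
#include <map>
#include <mutex>
#include <string>
#include <utility>
#include <vector>

// Hypothetical, simplified cache of logical block sizes keyed by directory.
class BlockSizeCache {
 public:
  using QueryFn = std::function<size_t(const std::string&)>;

  // `query` stands in for the expensive lookup of a directory's block size.
  explicit BlockSizeCache(QueryFn query) : query_(std::move(query)) {}

  // Called when a DB starts using `dirs`: query once, then count references.
  void Ref(const std::vector<std::string>& dirs) {
    std::lock_guard<std::mutex> lock(mu_);
    for (const std::string& dir : dirs) {
      auto it = cache_.find(dir);
      if (it == cache_.end()) {
        cache_.emplace(dir, Entry{query_(dir), 1});
      } else {
        ++it->second.refs;
      }
    }
  }

  // Called when a DB stops using `dirs`: drop entries nobody references.
  void Unref(const std::vector<std::string>& dirs) {
    std::lock_guard<std::mutex> lock(mu_);
    for (const std::string& dir : dirs) {
      auto it = cache_.find(dir);
      if (it != cache_.end() && --it->second.refs == 0) {
        cache_.erase(it);
      }
    }
  }

  // Returns the cached size, falling back to the query for unknown dirs.
  size_t GetBlockSize(const std::string& dir) {
    std::lock_guard<std::mutex> lock(mu_);
    auto it = cache_.find(dir);
    return it != cache_.end() ? it->second.size : query_(dir);
  }

 private:
  struct Entry {
    size_t size;
    int refs;
  };
  QueryFn query_;
  std::mutex mu_;
  std::map<std::string, Entry> cache_;
};
```

With such a cache, opening many SST files in a registered directory costs one query per directory instead of one per file. Holding the mutex while calling `query_` keeps the sketch short; a real implementation would likely avoid that.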

Other modifications:

1. rename `logical buffer size` to `logical block size` to be consistent with Linux terms.

2. declare `GetLogicalBlockSize` in `PosixHelper` to expose it to `PosixFileSystem`.

3. change the functions `IOError` and `IOStatus` in `env/io_posix.h` to have external linkage since they are used in other translation units too.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/6457

Test Plan:

1. A new unit test is added for `LogicalBlockSizeCache` in `env/io_posix_test.cc`.

2. A new integration test is added for `DB` operations related to the cache in `db/db_logical_block_size_cache_test.cc`.

`make check`

Differential Revision: D20131243

Pulled By: cheng-chang

fbshipit-source-id: 3077c50f8065c0bffb544d8f49fb10bba9408d04

Summary:

* **macOS version:** 10.15.2 (Catalina)

* **XCode/Clang version:** Apple clang version 11.0.0 (clang-1100.0.33.16)

Before this bugfix the error generated is:

```

In file included from ./util/compression.h:23:

./snappy-1.1.7/snappy.h:76:59: error: unknown type name 'string'; did you mean 'std::string'?

size_t Compress(const char* input, size_t input_length, string* output);

^~~~~~

std::string

/Library/Developer/CommandLineTools/usr/bin/../include/c++/v1/iosfwd:211:65: note: 'std::string' declared here

typedef basic_string<char, char_traits<char>, allocator<char> > string;

^

In file included from db/builder.cc:10:

In file included from ./db/builder.h:12:

In file included from ./db/range_tombstone_fragmenter.h:15:

In file included from ./db/pinned_iterators_manager.h:12:

In file included from ./table/internal_iterator.h:13:

In file included from ./table/format.h:25:

In file included from ./options/cf_options.h:14:

In file included from ./util/compression.h:23:

./snappy-1.1.7/snappy.h:85:19: error: unknown type name 'string'; did you mean 'std::string'?

string* uncompressed);

^~~~~~

std::string

/Library/Developer/CommandLineTools/usr/bin/../include/c++/v1/iosfwd:211:65: note: 'std::string' declared here

typedef basic_string<char, char_traits<char>, allocator<char> > string;

^

2 errors generated.

make: *** [jls/db/builder.o] Error 1

```

Pull Request resolved: https://github.com/facebook/rocksdb/pull/6496

Differential Revision: D20389254

Pulled By: pdillinger

fbshipit-source-id: 2864245c8d0dba7b2ab81294241a62f2adf02e20

Summary:

It's never too soon to refactor something. The patch splits the recently

introduced (`VersionEdit` related) `BlobFileState` into two classes

`BlobFileAddition` and `BlobFileGarbage`. The idea is that once blob files

are closed, they are immutable, and the only thing that changes is the

amount of garbage in them. In the new design, `BlobFileAddition` contains

the immutable attributes (currently, the count and total size of all blobs, checksum

method, and checksum value), while `BlobFileGarbage` contains the mutable

GC-related information elements (count and total size of garbage blobs). This is a

better fit for the GC logic and is more consistent with how SST files are handled.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/6502

Test Plan: `make check`

Differential Revision: D20348352

Pulled By: ltamasi

fbshipit-source-id: ff93f0121e80ab15e0e0a6525ba0d6af16a0e008

Summary:

In the current code base, we can use FaultInjectionTestEnv to simulate env issues such as file write/read errors, which is used in most of the tests. The PR https://github.com/facebook/rocksdb/issues/5761 introduced the File System as a new Env API. This PR implements FaultInjectionTestFS, which can be used to simulate File System issues such as IO errors. Users can specify any IOStatus error as input, so that the corresponding FS operations return that error to the caller.

A set of ErrorHandlerFSTests is introduced for testing.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/6414

Test Plan: pass make asan_check, pass error_handler_fs_test.

Differential Revision: D20252421

Pulled By: zhichao-cao

fbshipit-source-id: e922038f8ce7e6d1da329fd0bba7283c4b779a21

Summary:

BlobDB currently does not keep track of blob files: no records are written to

the manifest when a blob file is added or removed, and upon opening a database,

the list of blob files is populated simply based on the contents of the blob directory.

This means that lost blob files cannot be detected at the moment. We plan to solve

this issue by making blob files a part of `Version`; as a first step, this patch makes

it possible to store information about blob files in `VersionEdit`. Currently, this information

includes blob file number, total number and size of all blobs, and total number and size

of garbage blobs. However, the format is extensible: new fields can be added in

both a forward compatible and a forward incompatible manner if needed (similarly

to `kNewFile4`).

Pull Request resolved: https://github.com/facebook/rocksdb/pull/6416

Test Plan: `make check`

Differential Revision: D19894234

Pulled By: ltamasi

fbshipit-source-id: f9753e1f2aedf6dadb70c09b345207cb9c58c329

Summary:

Add a utility class `Defer` to defer the execution of a function until the Defer object goes out of scope.

Used in VersionSet::ProcessManifestWrites as an example.

The inline comments for class `Defer` have more details.
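
For readers unfamiliar with the pattern, a scope guard of this kind can be sketched in a few lines. This is a simplified illustration, not necessarily identical to the `Defer` class added in the PR; the use of `std::function` and the deleted copy operations are choices made for this sketch.

```cpp
#include <functional>
#include <utility>

// Simplified sketch of a scope guard: runs `fn` when the object is destroyed.
class Defer {
 public:
  explicit Defer(std::function<void()> fn) : fn_(std::move(fn)) {}
  ~Defer() {
    if (fn_) fn_();
  }

  // Copying would run the function twice, so it is disallowed.
  Defer(const Defer&) = delete;
  Defer& operator=(const Defer&) = delete;

 private:
  std::function<void()> fn_;
};
```

Declaring `Defer cleanup([&]() { /* release resources */ });` near the top of a function ensures the cleanup runs on every return path, which is useful in long functions with many early exits.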

Pull Request resolved: https://github.com/facebook/rocksdb/pull/6382

Test Plan: `make defer_test version_set_test && ./defer_test && ./version_set_test`

Differential Revision: D19797538

Pulled By: cheng-chang

fbshipit-source-id: b1a9b7306e4fd4f48ec2ab55783caa561a315f0f

Summary:

It's logically correct for PinnableSlice to support move semantics to transfer ownership of the pinned memory region. This PR adds both move constructor and move assignment to PinnableSlice.
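
The ownership transfer can be illustrated with a small stand-in class. `PinnedView` below is hypothetical and much simpler than `PinnableSlice`; it only assumes that a pinned region is released through a cleanup callback, and shows that after a move the source no longer owns the region, so the cleanup runs exactly once.

```cpp
#include <cstddef>
#include <functional>
#include <utility>

// Hypothetical stand-in for a slice that pins memory and releases it
// through a cleanup callback. Not the PinnableSlice implementation.
class PinnedView {
 public:
  PinnedView() = default;
  PinnedView(const char* data, size_t size, std::function<void()> cleanup)
      : data_(data), size_(size), cleanup_(std::move(cleanup)) {}
  ~PinnedView() { Reset(); }

  PinnedView(const PinnedView&) = delete;
  PinnedView& operator=(const PinnedView&) = delete;

  PinnedView(PinnedView&& other) noexcept { MoveFrom(std::move(other)); }
  PinnedView& operator=(PinnedView&& other) noexcept {
    if (this != &other) {
      Reset();  // release whatever this object currently pins
      MoveFrom(std::move(other));
    }
    return *this;
  }

  // Runs the cleanup (if any) and leaves the view empty.
  void Reset() {
    if (cleanup_) cleanup_();
    cleanup_ = nullptr;
    data_ = nullptr;
    size_ = 0;
  }

  const char* data() const { return data_; }
  size_t size() const { return size_; }
  bool IsPinned() const { return static_cast<bool>(cleanup_); }

 private:
  void MoveFrom(PinnedView&& other) {
    data_ = other.data_;
    size_ = other.size_;
    cleanup_ = std::move(other.cleanup_);
    // A moved-from std::function has unspecified state; clear it explicitly
    // so the source never runs the cleanup.
    other.cleanup_ = nullptr;
    other.data_ = nullptr;
    other.size_ = 0;
  }

  const char* data_ = nullptr;
  size_t size_ = 0;
  std::function<void()> cleanup_;
};
```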

Pull Request resolved: https://github.com/facebook/rocksdb/pull/6374

Test Plan:

A set of unit tests for the move semantics are added in slice_test.

So `make slice_test && ./slice_test`.

Differential Revision: D19739254

Pulled By: cheng-chang

fbshipit-source-id: f898bd811bb05b2d87384ec58b645e9915e8e0b1

Summary:

I set up a mirror of our Java deps on github so we can download

them through github URLs rather than maven.org, which is proving

terribly unreliable from Travis builds.

Also sanitized calls to curl, so they are easier to read and

appropriately fail on download failure.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/6348

Test Plan: CI

Differential Revision: D19633621

Pulled By: pdillinger

fbshipit-source-id: 7eb3f730953db2ead758dc94039c040f406790f3

Summary:

Both changes are related to RocksJava:

1. Allow dependencies that are already present on the host system due to Maven to be reused in Docker builds.

2. Extend the `make clean-not-downloaded` target to RocksJava, so that libraries needed as dependencies for the test suite are not deleted and re-downloaded unnecessarily.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/6318

Differential Revision: D19608742

Pulled By: pdillinger

fbshipit-source-id: 25e25649e3e3212b537ac4512b40e2e53dc02ae7

Summary:

Clang analyzer was falsely reporting on use of txn=nullptr.

Added a new const variable so that it can properly prune impossible

control flows.

Also, 'make analyze' previously required setting USE_CLANG=1 as an

environment variable, not a make variable, or else compilation failed with

errors like

g++: error: unrecognized command line option ‘-Wshorten-64-to-32’

Now USE_CLANG is not required for 'make analyze' (it's implied) and you

can do an incremental analysis (recompile what has changed) with

'USE_CLANG=1 make analyze_incremental'

Pull Request resolved: https://github.com/facebook/rocksdb/pull/6244

Test Plan: 'make -j24 analyze', 'make crash_test'

Differential Revision: D19225950

Pulled By: pdillinger

fbshipit-source-id: 14f4039aa552228826a2de62b2671450e0fed3cb

Summary:

Currently, db_stress performs verification by calling `VerifyDb()` at the end of the test and optionally before tests start. In case of corruption or an incorrect result, this is too late. This PR adds more verification in two ways.

1. For cf consistency test, each test thread takes a snapshot and verifies every N ops. N is configurable via `-verify_db_one_in`. This option is not supported in other stress tests.

2. For cf consistency test, we use another background thread in which a secondary instance periodically tails the primary (interval is configurable). We verify the secondary. Once an error is detected, we terminate the test and report. This does not affect other stress tests.

Test plan (devserver)

```

$./db_stress -test_cf_consistency -verify_db_one_in=0 -ops_per_thread=100000 -continuous_verification_interval=100

$./db_stress -test_cf_consistency -verify_db_one_in=1000 -ops_per_thread=10000 -continuous_verification_interval=0

$make crash_test

```

Pull Request resolved: https://github.com/facebook/rocksdb/pull/6173

Differential Revision: D19047367

Pulled By: riversand963

fbshipit-source-id: aeed584ad71f9310c111445f34975e5ab47a0615

Summary:

Adds a python script to syntax check all python files in the

repository and report any errors.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/6209

Test Plan:

'make check' with and without seeded syntax errors. Also look

for "No syntax errors in 34 .py files" on success, and in java_test CI output

Differential Revision: D19166756

Pulled By: pdillinger

fbshipit-source-id: 537df464b767260d66810b4cf4c9808a026c58a4

Summary:

And clean up related code, especially in stress test.

(More clean up of db_stress_test_base.cc coming after this.)

Pull Request resolved: https://github.com/facebook/rocksdb/pull/6154

Test Plan: make check, make blackbox_crash_test for a bit

Differential Revision: D18938180

Pulled By: pdillinger

fbshipit-source-id: 524d27621b8dbb25f6dff40f1081e7c00630357e

Summary:

Error message when running `make` on Mac OS with master branch (v6.6.0):

```

$ make

$DEBUG_LEVEL is 1

Makefile:168: Warning: Compiling in debug mode. Don't use the resulting binary in production

third-party/folly/folly/synchronization/WaitOptions.cpp:6:10: fatal error: 'folly/synchronization/WaitOptions.h' file not found

#include <folly/synchronization/WaitOptions.h>

^

1 error generated.

third-party/folly/folly/synchronization/ParkingLot.cpp:6:10: fatal error: 'folly/synchronization/ParkingLot.h' file not found

#include <folly/synchronization/ParkingLot.h>

^

1 error generated.

third-party/folly/folly/synchronization/DistributedMutex.cpp:6:10: fatal error: 'folly/synchronization/DistributedMutex.h' file not found

#include <folly/synchronization/DistributedMutex.h>

^

1 error generated.

third-party/folly/folly/synchronization/AtomicNotification.cpp:6:10: fatal error: 'folly/synchronization/AtomicNotification.h' file not found

#include <folly/synchronization/AtomicNotification.h>

^

1 error generated.

third-party/folly/folly/detail/Futex.cpp:6:10: fatal error: 'folly/detail/Futex.h' file not found

#include <folly/detail/Futex.h>

^

1 error generated.

GEN util/build_version.cc

$DEBUG_LEVEL is 1

Makefile:168: Warning: Compiling in debug mode. Don't use the resulting binary in production

third-party/folly/folly/synchronization/WaitOptions.cpp:6:10: fatal error: 'folly/synchronization/WaitOptions.h' file not found

#include <folly/synchronization/WaitOptions.h>

^

1 error generated.

third-party/folly/folly/synchronization/ParkingLot.cpp:6:10: fatal error: 'folly/synchronization/ParkingLot.h' file not found

#include <folly/synchronization/ParkingLot.h>

^

1 error generated.

third-party/folly/folly/synchronization/DistributedMutex.cpp:6:10: fatal error: 'folly/synchronization/DistributedMutex.h' file not found

#include <folly/synchronization/DistributedMutex.h>

^

1 error generated.

third-party/folly/folly/synchronization/AtomicNotification.cpp:6:10: fatal error: 'folly/synchronization/AtomicNotification.h' file not found

#include <folly/synchronization/AtomicNotification.h>

^

1 error generated.

third-party/folly/folly/detail/Futex.cpp:6:10: fatal error: 'folly/detail/Futex.h' file not found

#include <folly/detail/Futex.h>

```

Pull Request resolved: https://github.com/facebook/rocksdb/pull/6145

Differential Revision: D18910812

fbshipit-source-id: 5a4475466c2d0601657831a0b48d34316b2f0816

Summary:

db_stress_tool.cc is now a giant file. In order to make it easier to improve and maintain, break it down into multiple source files.

Most classes are turned into their own files. Separate .h and .cc files are created for gflag definitions. Another pair of .h and .cc files is created for some common functions. Some test execution logic that is only loosely related to class StressTest is moved to db_stress_driver.h and db_stress_driver.cc. All the files are located under db_stress_tool/. The directory is named this way because if its name ended with either stress or test, .gitignore would ignore any file under it, which is prone to causing issues during development.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/6134

Test Plan: Build under GCC7 with and without LITE on using GNU Make. Build with GCC 4.8. Build with cmake with -DWITH_TOOL=1

Differential Revision: D18876064

fbshipit-source-id: b25d0a7451840f31ac0f5ebb0068785f783fdf7d

Summary:

Before this fix, `make all` would emit the full compilation command when building

object files in the third-party/folly directory even if default verbosity is

0 (AM_DEFAULT_VERBOSITY).

Test Plan (devserver):

```

$make all | tee build.log

$make check

```

Check build.log to verify.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/6120

Differential Revision: D18795621

Pulled By: riversand963

fbshipit-source-id: 04641a8359cd4fd55034e6e797ed85de29ee2fe2

Summary:

Add the jni library for musl-libc, specifically for incorporating into Alpine based docker images. The classifier is `musl64`.

I have signed the CLA electronically.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/3143

Differential Revision: D18719372

fbshipit-source-id: 6189d149310b6436d6def7d808566b0234b23313

Summary:

**NOTE**: This also needs to be back-ported to 6.4.6

Fix a regression introduced in f2bf0b2 by https://github.com/facebook/rocksdb/pull/5674 whereby the compiled library would get the wrong name on PPC64LE platforms.

On PPC64LE, the regression caused the library to be named `librocksdbjni-linux64.so` instead of `librocksdbjni-linux-ppc64le.so`.

This PR corrects the name back to `librocksdbjni-linux-ppc64le.so` and also corrects the ordering of conditional arguments in the Makefile to match the expected order as defined in the documentation for Make.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/6080

Differential Revision: D18710351

fbshipit-source-id: d4db87ef378263b57de7f9edce1b7d15644cf9de

Summary:

* We can reuse downloaded 3rd-party libraries

* We can isolate the build to a Docker volume. This is useful for investigating failed builds, as we can examine the volume by assigning it a name during the build.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/6079

Differential Revision: D18710263

fbshipit-source-id: 93f456ba44b49e48941c43b0c4d53995ecc1f404

Summary:

Had complications with LITE build and valgrind test.

Reverts/fixes small parts of PR https://github.com/facebook/rocksdb/issues/6007

Pull Request resolved: https://github.com/facebook/rocksdb/pull/6036

Test Plan:

make LITE=1 all check

and

ROCKSDB_VALGRIND_RUN=1 DISABLE_JEMALLOC=1 make -j24 db_bloom_filter_test && ROCKSDB_VALGRIND_RUN=1 DISABLE_JEMALLOC=1 ./db_bloom_filter_test

Differential Revision: D18512238

Pulled By: pdillinger

fbshipit-source-id: 37213cf0d309edf11c483fb4b2fb6c02c2cf2b28

Summary:

Adds an improved, replacement Bloom filter implementation (FastLocalBloom) for full and partitioned filters in the block-based table. This replacement is faster and more accurate, especially for high bits per key or millions of keys in a single filter.

Speed

The improved speed, at least on recent x86_64, comes from

* Using fastrange instead of modulo (%)

* Using our new hash function (XXH3 preview, added in a previous commit), which is much faster for large keys and only *slightly* slower on keys around 12 bytes if hashing the same size many thousands of times in a row.

* Optimizing the Bloom filter queries with AVX2 SIMD operations. (Added AVX2 to the USE_SSE=1 build.) Careful design was required to support (a) SIMD-optimized queries, (b) compatible non-SIMD code that's simple and efficient, (c) flexible choice of number of probes, and (d) essentially maximized accuracy for a cache-local Bloom filter. Probes are made eight at a time, so any number of probes up to 8 is the same speed, then up to 16, etc.

* Prefetching cache lines when building the filter. Although this optimization could be applied to the old structure as well, it seems to balance out the small added cost of accumulating 64 bit hashes for adding to the filter rather than 32 bit hashes.
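
The first item, fastrange, replaces a modulo with a multiply and a shift. A minimal sketch of the general technique for 32-bit hashes follows; the function name `FastRange32` is chosen for this example, and the exact form used in the PR may differ.

```cpp
#include <cassert>
#include <cstdint>

// Maps a 32-bit hash to [0, range) without a division: treat hash / 2^32 as
// a fraction in [0, 1) and scale it by `range`.
inline uint32_t FastRange32(uint32_t hash, uint32_t range) {
  assert(range > 0);
  return static_cast<uint32_t>((static_cast<uint64_t>(hash) * range) >> 32);
}
```

Unlike `hash % range`, the result is determined mostly by the high bits of the hash, so this assumes a hash function whose high bits are well mixed.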

Here's nominal speed data from filter_bench (200MB in filters, about 10k keys each, 10 bits filter data / key, 6 probes, avg key size 24 bytes, includes hashing time) on Skylake DE (relatively low clock speed):

$ ./filter_bench -quick -impl=2 -net_includes_hashing # New Bloom filter

Build avg ns/key: 47.7135

Mixed inside/outside queries...

Single filter net ns/op: 26.2825

Random filter net ns/op: 150.459

Average FP rate %: 0.954651

$ ./filter_bench -quick -impl=0 -net_includes_hashing # Old Bloom filter

Build avg ns/key: 47.2245

Mixed inside/outside queries...

Single filter net ns/op: 63.2978

Random filter net ns/op: 188.038

Average FP rate %: 1.13823

Similar build time but dramatically faster query times on hot data (63 ns to 26 ns), and somewhat faster on stale data (188 ns to 150 ns). Performance differences on batched and skewed query loads are between these extremes as expected.

The only other interesting thing about speed is "inside" (query key was added to filter) vs. "outside" (query key was not added to filter) query times. The non-SIMD implementations are substantially slower when most queries are "outside" vs. "inside". This goes against what one might expect or would have observed years ago, as "outside" queries only need about two probes on average, due to short-circuiting, while "inside" always have num_probes (say 6). The problem is probably the nastily unpredictable branch. The SIMD implementation has few branches (very predictable) and has pretty consistent running time regardless of query outcome.

Accuracy

The generally improved accuracy (re: Issue https://github.com/facebook/rocksdb/issues/5857) comes from a better design for probing indices

within a cache line (re: Issue https://github.com/facebook/rocksdb/issues/4120) and improved accuracy for millions of keys in a single filter from using a 64-bit hash function (XXH3p). Design details in code comments.

Accuracy data (generalizes, except old impl gets worse with millions of keys):

Memory bits per key: FP rate percent old impl -> FP rate percent new impl

6: 5.70953 -> 5.69888

8: 2.45766 -> 2.29709

10: 1.13977 -> 0.959254

12: 0.662498 -> 0.411593

16: 0.353023 -> 0.0873754

24: 0.261552 -> 0.0060971

50: 0.225453 -> ~0.00003 (less than 1 in a million queries are FP)

Fixes https://github.com/facebook/rocksdb/issues/5857

Fixes https://github.com/facebook/rocksdb/issues/4120

Unlike the old implementation, this implementation has a fixed cache line size (64 bytes). At 10 bits per key, the accuracy of this new implementation is very close to the old implementation with 128-byte cache line size. If there's sufficient demand, this implementation could be generalized.

Compatibility

Although old releases would see the new structure as corrupt filter data and read the table as if there's no filter, we've decided only to enable the new Bloom filter with new format_version=5. This provides a smooth path for automatic adoption over time, with an option for early opt-in.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/6007

Test Plan: filter_bench has been used thoroughly to validate speed, accuracy, and correctness. Unit tests have been carefully updated to exercise new and old implementations, as well as the logic to select an implementation based on context (format_version).

Differential Revision: D18294749

Pulled By: pdillinger

fbshipit-source-id: d44c9db3696e4d0a17caaec47075b7755c262c5f

Summary:

From bzip2's official [download page](http://www.bzip.org/downloads.html), we can download it from SourceForge. This source is more credible than the previous web archive.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/5995

Differential Revision: D18377662

fbshipit-source-id: e8353f83d5d6ea6067f78208b7bfb7f0d5b49c05

Summary:

Include db_stress_tool in the rocksdb tools lib.

Test Plan (on devserver):

```

$make db_stress

$./db_stress

$make all && make check

```

Pull Request resolved: https://github.com/facebook/rocksdb/pull/5950

Differential Revision: D18044399

Pulled By: riversand963

fbshipit-source-id: 895585abbbdfd8b954965921dba4b1400b7af1b1

Summary:

Expose the db stress test by providing db_stress_tool.h as a public header.

This PR does the following:

- adds a new header, db_stress_tool.h, in include/rocksdb/

- renames db_stress.cc to db_stress_tool.cc

- adds a db_stress.cc which simply invokes a test function.

- updates the Makefile accordingly.

Test Plan (dev server):

```

make db_stress

./db_stress

```

Pull Request resolved: https://github.com/facebook/rocksdb/pull/5937

Differential Revision: D17997647

Pulled By: riversand963

fbshipit-source-id: 1a8d9994f89ce198935566756947c518f0052410

Summary:

Example: using the tool before and after PR https://github.com/facebook/rocksdb/issues/5784 shows that

the refactoring, presumed performance-neutral, actually sped up SST

filters by about 3% to 8% (repeatable result):

Before:

- Dry run ns/op: 22.4725

- Single filter ns/op: 51.1078

- Random filter ns/op: 120.133

After:

+ Dry run ns/op: 22.2301

+ Single filter run ns/op: 47.4313

+ Random filter ns/op: 115.9

Only tests filters for the block-based table (full filters and

partitioned filters - same implementation; not block-based filters),

which seems to be the recommended format/implementation.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/5825

Differential Revision: D17804987

Pulled By: pdillinger

fbshipit-source-id: 0f18a9c254c57f7866030d03e7fa4ba503bac3c5

Summary:

Make class ObsoleteFilesTest inherit from DBTestBase.

Test plan (on devserver):

```

$COMPILE_WITH_ASAN=1 make obsolete_files_test

$./obsolete_files_test

```

Pull Request resolved: https://github.com/facebook/rocksdb/pull/5820

Differential Revision: D17452348

Pulled By: riversand963

fbshipit-source-id: b09f4581a18022ca2bfd79f2836c0bf7083f5f25

Summary:

Refactoring to consolidate implementation details of legacy

Bloom filters. This helps to organize and document some related,

obscure code.

Also added make/cpp var TEST_CACHE_LINE_SIZE so that it's easy to

compile and run unit tests for non-native cache line size. (Fixed a

related test failure in db_properties_test.)

Pull Request resolved: https://github.com/facebook/rocksdb/pull/5784

Test Plan:

make check, including recently added Bloom schema unit tests

(in ./plain_table_db_test && ./bloom_test), and including with

TEST_CACHE_LINE_SIZE=128U and TEST_CACHE_LINE_SIZE=256U. Tested the

schema tests with temporary fault injection into new implementations.

Some performance testing with modified unit tests suggests a small to moderate

improvement in speed.

Differential Revision: D17381384

Pulled By: pdillinger

fbshipit-source-id: ee42586da996798910fc45ac0b6289147f16d8df

Summary:

This will allow us to fix history by having the code changes for PR#5784 properly attributed to it.

Pull Request resolved: https://github.com/facebook/rocksdb/pull/5810

Differential Revision: D17400231

Pulled By: pdillinger

fbshipit-source-id: 2da8b1cdf2533cfedb35b5526eadefb38c291f09